If you’ve ever had an aging parent or grandparent who struggles to live independently, you know how bittersweet it can feel.

On one hand, you want them to hold onto dignity and autonomy. On the other, you worry constantly about safety—missed medications, falls, loneliness.

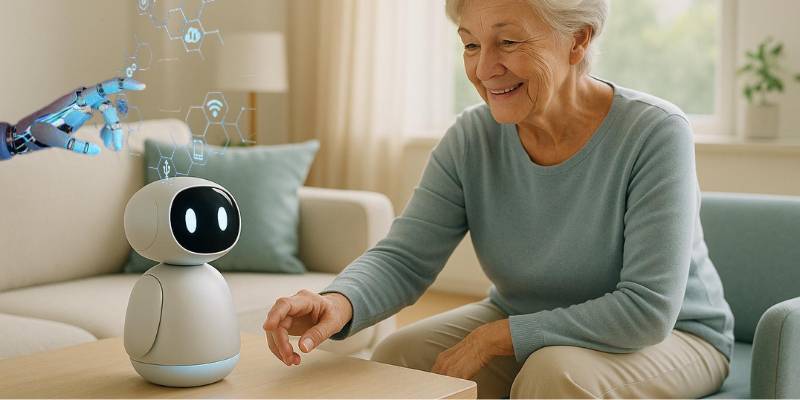

So when we hear that AI assistants can step in to help, the idea feels almost miraculous. Voice reminders, fall detection, instant communication with family or caregivers—what’s not to like?

But here’s the tricky part: that same device listening to help Grandma remember her blood pressure pill might also be quietly logging her habits, her voice, her location. Helpful? Yes. Safe? Not always. Respectful of privacy? That depends.

So the real question isn’t just whether AI assistants help the elderly. It’s whether they’re a net enhancement of independence—or a subtle invasion of privacy.

The Promise: Independence at the Touch of a Voice

Let’s start with the bright side.

For older adults, everyday life is filled with small hurdles that add up. Forgetting appointments. Struggling with new technologies. Managing health conditions that require constant monitoring.

AI assistants, whether in the form of smart speakers, wearable devices, or specialized care platforms, can take on some of that cognitive and logistical load.

Examples of Real Benefits

- Medication reminders: A 2019 study published in the Journal of Medical Internet Research found that digital reminders significantly improved adherence rates among older adults managing chronic conditions (JMIR study).

- Emergency response: Some assistants connect directly to emergency services when unusual patterns are detected. Falls, in particular, are a huge concern—one in four Americans over 65 experiences a fall each year, according to the CDC.

- Social connection: Loneliness is a silent killer. The National Academies of Sciences, Engineering, and Medicine report that social isolation significantly increases the risk of premature death (NASEM report). AI assistants that facilitate quick calls or even light conversation may help soften that isolation.

So yes, the promise is powerful. Independence is preserved. Families sleep better knowing someone—or something—is “there.”

The Shadow Side: Surveillance in Disguise

Now, let’s look at the other side.

AI assistants often require constant listening. That means microphones are live, sensors are tracking, and data is being sent to servers. Companies promise this data is secure, but the track record isn’t reassuring.

Bloomberg revealed in 2019 that human contractors were listening to snippets of Alexa recordings to improve accuracy.

Many users had no idea this was happening. That’s not just a glitch—that’s a breach of trust.

For the elderly, who may not understand privacy settings or consent forms buried in legal jargon, the risk is even greater.

Their homes, which should be sanctuaries, risk becoming nodes in the sprawling network of surveillance capitalism.

And this leads to my hot take on the question “Are AI assistants a gateway to surveillance capitalism?”: absolutely yes, unless we step in with stronger safeguards.

Emotional Stakes: Why This Isn’t Abstract

Here’s where I get personal.

My own grandmother, who lived alone for years, would’ve loved a device to remind her to take her arthritis meds or to call me when she couldn’t find the phone.

But she was also deeply private. The idea of “something listening” in her home would have horrified her.

That tension—the pull between independence and privacy—is not theoretical. It’s lived, emotional, and often painful.

For many families, it’s a daily negotiation: how much do we trade privacy for peace of mind?

From Simple Tasks to Emotional Terrain: Scheduling Meetings to Therapy Sessions

What began as glorified calendars is morphing into something much deeper. Assistants aren’t just about productivity anymore.

Some now provide therapy-like interactions, offering comfort or mindfulness guidance.

For seniors, that might sound like companionship. But here’s the catch: it’s companionship without authenticity.

Machines can mimic empathy, but they can’t feel it. The risk of emotional dependency looms large.

And if those sessions are recorded and analyzed? The intimacy of therapy—arguably one of the most private human experiences—turns into another data point in a corporate dataset. That’s when “independence enhancer” crosses the line into “privacy invader.”

Money Matters: Who Pays for Independence?

Another overlooked angle is cost. Some AI assistants are affordable, but the most advanced health-monitoring devices often come with steep subscription fees.

That raises equity concerns. Should only wealthier seniors have access to life-enhancing AI tools? Or should this become a covered part of healthcare, subsidized by insurance or Medicare?

Here’s where regulation and policy come into play. If governments view AI assistants as public health assets, costs could be lowered. If left entirely to corporations, seniors in lower-income brackets may be excluded.

The Education Angle: What the Future of AI Assistants in Education Can Teach Us

You might wonder, what does education have to do with elderly care? Surprisingly, quite a lot.

Lessons from AI assistants in schools—bias, equity, dependence—mirror what we’re seeing with seniors. If assistants misinterpret non-native accents in classrooms, imagine the consequences for elderly immigrants in hospitals.

If privacy is mishandled with children’s learning data, why expect better for seniors’ medical data?

That’s why the debate over the future of AI assistants in education is also relevant here. Both groups, kids and seniors, are vulnerable. Both deserve protections.

Politics and Neutrality: Do AI Assistants Have Political Bias?

Here’s a curveball: should we worry about political influence in AI assistants used by seniors?

It may sound far-fetched, but think about it. Many older adults rely on their assistants to read the news aloud, summarize political debates, or provide voting information. If the assistant has even subtle political bias, it could influence perceptions and choices.

Studies already show that large language models reflect biases from their training data. In a politically divided world, seniors—who may trust their devices as neutral—are especially at risk of manipulation.

So yes, understanding whether AI assistants have political bias is not just an academic exercise. It’s about protecting democracy, too.

Stories That Bring It to Life

I once read about an older man in Florida who used his assistant daily for reminders after a stroke. It gave him confidence to live alone.

But then he discovered it had recorded parts of private conversations and stored them. His sense of safety was shaken—not just in the device, but in his own home.

These stories matter because they move the debate from statistics to human lives. Seniors aren’t data points. They’re people navigating dignity, fear, and hope.

Regulation: Where Do Governments Fit In?

So, should governments regulate AI assistants in elder care? Probably yes.

Potential areas for regulation:

- Privacy standards: Limit what data can be collected, and ban resale to advertisers.

- Accessibility guarantees: Ensure devices are affordable and user-friendly for seniors.

- Transparency mandates: Make privacy policies clear and simple enough for non-technical users.

- Bias testing: Regular audits to ensure accuracy across languages, accents, and cultural backgrounds.

This isn’t about stifling innovation. It’s about protecting the most vulnerable while still letting technology evolve.

Looking Ahead: A Balancing Act

The future isn’t going to be black or white. AI assistants will likely continue to offer seniors invaluable independence while also posing new risks.

The key is balance. Families need to weigh trade-offs, companies need to design responsibly, and governments need to step in where markets fall short.

Most of all, we need to listen to seniors themselves. What do they want? Some may gladly trade privacy for independence. Others may prioritize dignity over digital convenience. Their voices, not just ours, should guide the conversation.

My Personal Conclusion

So, are AI assistants for the elderly independence enhancers or privacy invaders? Honestly, both.

I think they can be transformative tools if, and only if, we set clear boundaries around privacy, cost, and transparency.

Without those safeguards, they risk becoming silent invaders in the very homes they’re meant to protect.

I believe we owe our elders more than just technology. We owe them dignity, choice, and trust. And AI assistants, if done right, could help us deliver all three.

Closing Thought

In the end, this debate isn’t really about technology. It’s about humanity. How much do we value the independence of our elders? How much do we respect their privacy? And how do we design systems that honor both?

Because if we don’t ask those questions now, we risk building a future where convenience wins—but dignity loses.