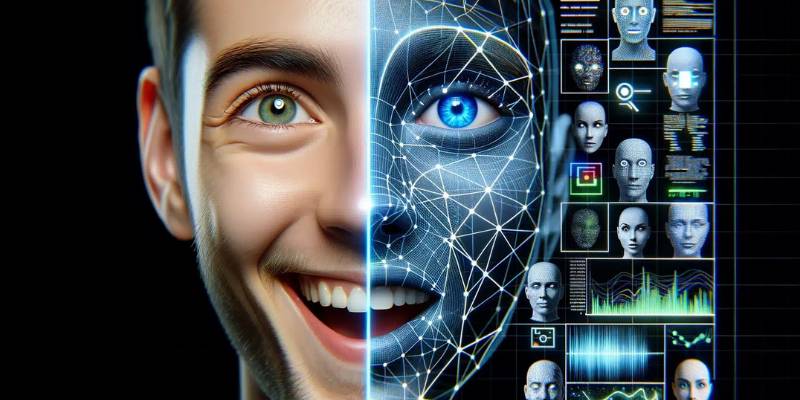

There’s something almost unsettling about looking at an AI-generated portrait and feeling like the subject is looking back at you.

The tilt of the head, the subtle curve of the lips, even the faint tension around the eyes—details that suggest not just anatomy but emotion. And yet, deep down, I know this “person” doesn’t exist.

So, here’s the question I keep circling: if AI can mimic the look of a smile or the sorrow of a tear, does that mean it can truly capture human emotions? Or is it all surface—an echo of what we, as humans, project onto pixels?

In this article, I want to dig deep into this dilemma. I’ll explore how AI photo generators work, what they get right and what they get wrong, how professionals are responding, and why this debate isn’t just about technology but about our relationship with authenticity itself.

Why Emotion Matters in Images

Think about the photos that have stayed with you the longest. Chances are, they weren’t the technically perfect ones.

They were the messy, emotional shots—a friend laughing until they cried, a stranger at a protest with fire in their eyes, or a family photo where everyone’s expressions tell their own story.

Emotion is what makes photography powerful. It’s what turns an image from decoration into memory, from pixels into something that sticks in your chest.

That’s why the question of whether AI can capture emotion matters so much. Because if it can’t, then its artistic ceiling is lower than many claim.

How AI Photo Generators Mimic Emotion

Let’s get technical for a moment. AI doesn’t “feel” emotions—it doesn’t even understand them. What it does is recognize patterns.

Trained on billions of images, it learns what happiness “looks like”: teeth showing, eyes crinkled, cheeks lifted. Sadness? Drooping eyelids, downturned mouth, shadows around the face.

When prompted—“a portrait of a joyful woman”—the model assembles those visual cues into a new image. To us, it looks like joy. To the machine, it’s just data correlations.

This raises an uncomfortable but fascinating question: if the illusion of emotion is convincing enough, does it matter that it’s not “real”?
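
To make "just data correlations" a little more concrete, here is a toy sketch in Python. It is not how a real image generator works; production systems learn from pixels with large neural networks. The tiny `TRAINING` list, the cue names, and the functions `learn_cue_frequencies` and `assemble` are all made up for illustration. The point is only that a program can pick the cues that most often co-occur with a label like "joyful" without any notion of what joy is.

```python
from collections import Counter, defaultdict

# Hypothetical, hand-made "training set": visible cues paired with a caption label.
# A real model would see raw images and captions, not tidy cue names like these.
TRAINING = [
    ({"teeth showing", "eyes crinkled", "cheeks lifted"}, "joyful"),
    ({"teeth showing", "cheeks lifted"}, "joyful"),
    ({"eyes crinkled", "cheeks lifted", "shadows around face"}, "joyful"),
    ({"drooping eyelids", "downturned mouth"}, "sad"),
    ({"downturned mouth", "shadows around face"}, "sad"),
    ({"drooping eyelids", "downturned mouth", "shadows around face"}, "sad"),
]


def learn_cue_frequencies(examples):
    """For each label, return the fraction of its examples that show each cue."""
    cue_counts = defaultdict(Counter)
    label_totals = Counter()
    for cues, label in examples:
        label_totals[label] += 1
        cue_counts[label].update(cues)
    return {
        label: {cue: n / label_totals[label] for cue, n in counts.items()}
        for label, counts in cue_counts.items()
    }


def assemble(label, freqs, k=2):
    """Pick the k cues most strongly associated with a label (ties broken by name)."""
    ranked = sorted(freqs[label].items(), key=lambda item: (-item[1], item[0]))
    return [cue for cue, _ in ranked[:k]]


freqs = learn_cue_frequencies(TRAINING)
print(assemble("joyful", freqs))  # ['cheeks lifted', 'eyes crinkled']
print(assemble("sad", freqs))     # ['downturned mouth', 'drooping eyelids']
```

Nothing in that code knows what a smile means. It counts, ranks, and returns whatever showed up most often next to the word.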

Photographers Are Divided

I’ve had conversations with professional photographers, and the responses are fascinatingly split. On one hand, some argue that photography has always been about manipulation. Lighting, posing, even Photoshop—all tools that bend reality.

From this perspective, AI is just the next step, and its ability to create emotional resonance is valid, even if synthetic.

But others are adamant: you can’t fake the electricity of a real moment. They’ll tell you about the seconds after a bride sees her partner at the end of the aisle, or the raw exhaustion on the face of a photojournalist’s subject. To them, AI can mimic, but it can’t feel.

In short, photographers are divided: is AI enhancing artistry, or is it hollowing out the very essence of it?

Realism vs. Creativity

One of the big tensions here is realism versus creativity. Some people use AI photo tools to push boundaries: dreamlike images, surreal settings, emotions exaggerated for artistic effect. Others want hyperrealistic portraits, indistinguishable from traditional photography.

Neither is wrong, but the expectations are different. If you’re commissioning a creative fantasy artwork, emotional exaggeration can be part of the charm. If you’re looking for a family portrait, that uncanny perfection can feel… cold.

Personally, I find myself torn. I love the imaginative freedom AI allows, but when it comes to realism, there’s always something slightly “off” for me. Maybe it’s the symmetry that feels too perfect, or the gaze that doesn’t quite carry warmth.

The Rise of AI-Powered Trends

It’s worth noting that emotional mimicry isn’t just an artistic quirk. It’s part of the broader AI-powered trends shaping visual culture.

Marketing campaigns are already using AI-generated faces because they test just as well with audiences as real ones. In some industries, people can’t even tell the difference.

According to a 2022 study published in PNAS (Nightingale and Farid), participants could not reliably distinguish AI-generated faces from real ones, and on average rated the AI faces as slightly more trustworthy.

That’s huge. If synthetic emotions can persuade, influence, and sell, then AI doesn’t need to “feel” to be effective. It just needs to look convincing enough for us to believe.

Where the Illusion Breaks Down

And yet… there are cracks in the façade.

- Microexpressions: Humans are astonishingly sensitive to tiny facial movements. AI can approximate them, but subtle timing—the milliseconds between surprise and laughter—often gets lost.

- Contextual Emotion: Real emotion doesn’t exist in isolation. It’s tied to events, relationships, and stories. AI can draw a tear, but it can’t give it the weight of the moment that caused it.

- Consistency: In multi-image sets, AI sometimes struggles to keep emotional continuity. A subject smiling warmly in one shot may look eerily detached in the next.

For casual viewers, these gaps might not matter. For those attuned to emotional nuance, they can be jarring.

Ethics: Manipulating Emotions

Here’s where things get thorny. If AI can create believable emotional imagery, what are the ethical implications?

Ethics matter here because emotional imagery is incredibly persuasive. Political campaigns could fabricate “photos” of candidates in emotional situations.

Advertisers might use synthetic children smiling or crying to trigger parental instincts. Journalists could blur the line between reporting and fabrication.

The danger isn’t hypothetical; it’s already happening. In 2023, fake AI images of global leaders circulated during political crises, among them fabricated pictures of Donald Trump being arrested, sparking outrage before they were debunked.

So while AI’s ability to mimic emotions can be creatively liberating, it also raises urgent questions about consent, authenticity, and manipulation.

What Audiences Say

Do viewers actually care if the emotion is real? Interestingly, surveys suggest a split.

- A 2024 poll by Morning Consult found that 47% of Americans said they were uncomfortable with AI-generated images being used in advertising.

- Yet, when asked if they could tell whether an image was AI-generated, only 34% felt confident.

This gap highlights a paradox: people want authenticity, but they often can’t detect inauthenticity. That makes trust both more fragile and more crucial.

My Experience With AI Emotion

On a personal note, I’ve spent hours experimenting with AI portraits. Sometimes, the results stop me in my tracks. A gaze that feels thoughtful. A smile that feels warm. For a moment, I find myself almost believing.

And then I remind myself: these aren’t memories. They aren’t people. They’re simulations. And while I love them as creative expressions, I feel a pang of sadness thinking about how easily they could be mistaken for real.

I’ve also noticed something strange. The more I look at AI faces, the more I start scrutinizing real ones. Almost like my brain is recalibrating what “real” should look like. And that unsettles me more than I’d like to admit.

The Future of AI Emotion in Photography

So where does this all lead? I think we’re heading toward a few likely outcomes:

- Hybrid Use: Photographers will combine real emotion with AI refinements—removing distractions, enhancing expressions, or even generating supplemental visuals.

- Disclosure Norms: Labels like “AI-assisted” may become as common as “Photoshop retouched.”

- Cultural Shifts: Younger generations, raised with AI, may care less about whether an emotion is authentic and more about whether it resonates.

But the bigger question remains: as AI gets better at simulating emotions, will we become desensitized to real ones? Or will authenticity become even more prized in response?

Conclusion: Illusion vs. Truth

So, can AI photo generators really capture human emotions? My honest answer: they can imitate them convincingly, but they can’t originate them. They’re like mirrors reflecting our expectations back at us—powerful, persuasive, sometimes beautiful, but ultimately hollow.

And maybe that’s okay, as long as we remember the difference. A simulated smile may sell a product, inspire a story, or even move us. But the messy, unpredictable, vulnerable emotions of real humans? Those still belong to us.

The danger isn’t that AI will feel. The danger is that we’ll stop noticing whether anyone actually does.