I’ll be honest—when I first heard about text-to-video AI, my initial reaction was: no way. Turning a written prompt into moving pictures that look like a film?

It sounded like magic, or maybe a clever hoax. But then I saw demos from companies like Runway, Pika Labs, and OpenAI.

Suddenly, a sentence like “a medieval knight riding a dragon through the clouds” transformed into short clips that looked eerily close to fantasy movie trailers.

Of course, the clips weren’t perfect. Sometimes the dragon had too many wings, or the knight’s armor flickered like bad CGI.

But even with those flaws, the idea was breathtaking: type a line of text, and watch it become a video.

So how do these models actually work? What’s happening under the hood? That’s the rabbit hole I want to take you down—part explanation, part reflection, and a lot of wondering about what this means for art, storytelling, and maybe even our sense of reality.

The Basics: How AI Generators Function

Let’s start with the foundation. When we say “text-to-video AI,” what we’re really talking about are large machine-learning models trained on enormous datasets of images, videos, and text.

At their core, here’s how AI generators function in this context:

- Understanding the text: The system first runs your prompt through a text encoder, a language model that turns the words into numerical vectors capturing meaning and context. For example, in “a golden retriever surfing on a beach at sunset,” those vectors carry information about the objects (dog, surfboard, beach, sunset), their relationships (dog on board, board on water), and the style (sunset lighting).

- Mapping to visuals: The AI then predicts what those elements should look like by drawing on patterns it learned from millions of images and videos during training.

- Generating frames: Using diffusion models (a method that refines noisy data into clear images) or transformers, the system starts generating frame after frame.

- Making it move: Here’s the hard part. Unlike static images, video requires temporal consistency. The dog has to look like the same dog in frame one and frame fifty, and the waves need to move smoothly. That’s where advanced architectures and motion models come into play.
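To make the "refine noise into an image" idea a little more tangible, here is a toy sketch in Python. It is not how any real product works: the `target` list is a stand-in I made up for what a trained network would predict from the prompt, and the function name `toy_denoise` is hypothetical. A real diffusion model never sees the final answer; it only has a learned guess at each step.

```python
import random

def toy_denoise(target, steps=50, seed=0):
    """Start from random noise and move a little closer to `target` each step.

    `target` is an assumption standing in for a trained network's prediction;
    a real model has to estimate it from the prompt and the noisy frame.
    """
    rng = random.Random(seed)
    frame = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise to begin with
    for step in range(steps):
        remaining = steps - step
        # Remove an equal share of the remaining error, like one denoising step.
        frame = [x + (t - x) / remaining for x, t in zip(frame, target)]
    return frame

target = [0.2, 0.8, 0.5, 0.1]  # hypothetical 4-pixel "image"
result = toy_denoise(target)
error = max(abs(x - t) for x, t in zip(result, target))
print(f"largest remaining error: {error:.6f}")
```

The point is only the shape of the loop: many small corrections, starting from static. Everything hard about the real thing lives in the part this sketch fakes.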

The result? Short clips—sometimes just a few seconds long—that simulate reality or imagination, depending on your prompt.

It’s messy, it’s computationally expensive, and it’s far from perfect. But the scaffolding is remarkable.

Why This Is Harder Than Images

People sometimes ask: if text-to-image AI works so well already, why is video such a big leap?

Here’s why:

- Temporal coherence: An image is a single moment. A video is hundreds of frames. Getting them to line up smoothly without flickering is incredibly difficult.

- Physics and motion: In video, gravity, shadows, and momentum have to make sense. A bouncing ball can’t suddenly morph into a cube mid-jump.

- Scale: The amount of data required is far larger, because every second of footage adds dozens of frames. Training on billions of still images is one thing; doing the same for moving footage is another beast entirely.

That’s why many current text-to-video models are limited to just a few seconds. They’re essentially generating a short burst of frames rather than full-length films. But even those few seconds are enough to show what’s possible.
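Some back-of-the-envelope arithmetic helps here. The sketch below counts raw color values in one still image versus one short clip. The resolution (1280x720), frame rate (24 fps), and clip length (5 seconds) are my assumptions for illustration, not figures from any particular model.

```python
def raw_values(width, height, frames=1, channels=3):
    """Count the raw color values in an image or an uncompressed clip."""
    return width * height * channels * frames

fps = 24      # assumed frame rate
seconds = 5   # assumed clip length

image = raw_values(1280, 720)
clip = raw_values(1280, 720, frames=fps * seconds)

print(image)          # 2764800
print(clip)           # 331776000
print(clip // image)  # 120
```

So under these assumptions a five-second clip carries 120 times the raw data of a single frame, and the model also has to keep all 120 frames consistent with each other.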

A Step-by-Step Walkthrough

To make this more concrete, imagine you type: “A snowy mountain landscape, cinematic style, with a lone wolf howling at the moon.”

Here’s what happens behind the scenes:

- Prompt parsing: The words “snowy,” “mountain,” “wolf,” “howl,” “moon,” and “cinematic style” are extracted.

- Visual grounding: The model draws on its learned representations: what mountains look like in nature documentaries, how wolves appear in wildlife footage, the look of “cinematic cutscenes” from movie datasets. It isn’t looking anything up; those patterns were absorbed during training.

- Frame initialization: It begins with noise and gradually refines each frame using diffusion.

- Motion smoothing: A temporal model predicts how the wolf should move between frames, ensuring the animation looks continuous.

- Stylistic overlay: The system applies shading, lighting, and cinematic framing, giving the final output that polished “film-like” feel.
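The motion smoothing step is the one I find easiest to picture with a tiny experiment. The sketch below measures flicker as the average change between consecutive frames, then applies a plain three-frame moving average. To be clear, this is a simplification I swapped in: real models try to build consistency into generation itself rather than blurring frames afterwards, and the function names and sample frames here are hypothetical.

```python
def flicker(frames):
    """Mean absolute change between consecutive frames (lower means steadier)."""
    total, count = 0.0, 0
    for prev, cur in zip(frames, frames[1:]):
        for a, b in zip(prev, cur):
            total += abs(a - b)
            count += 1
    return total / count

def smooth(frames):
    """Average each frame with its immediate neighbours (3-frame moving average)."""
    out = []
    for i in range(len(frames)):
        window = frames[max(0, i - 1): i + 2]
        out.append([sum(px) / len(window) for px in zip(*window)])
    return out

# Hypothetical 2-pixel frames whose brightness jumps on every frame.
frames = [[0.0, 1.0], [1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]

before = flicker(frames)
after = flicker(smooth(frames))
print(before, after)
```

The smoothed sequence flickers less, but averaging also smears real motion, which is one reason post-hoc smoothing alone is not enough.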

The clip you see may only last five seconds, but in those seconds, hundreds of micro-decisions are being made by the model.

Model-Powered Editing: Beyond Generation

One of the most exciting (and sometimes overlooked) aspects of this technology isn’t just generating video from scratch—it’s editing.

Think about model-powered editing like this: you upload a rough video of yourself presenting a pitch.

The AI can change the background to a sleek office, adjust your clothing color, or even sync your lips to a different script. It’s Photoshop for moving pictures, only smarter and often more intuitive.
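For the background swap in particular, the last step can be sketched as simple per-pixel blending. This assumes some other model has already produced a mask marking which pixels belong to the presenter; that mask, the pixel values, and the `composite` helper are all hypothetical, and producing a good mask is the genuinely hard part.

```python
def composite(foreground, background, mask):
    """Blend two frames pixel by pixel.

    A mask value of 1.0 keeps the foreground pixel, 0.0 shows the background.
    """
    return [m * f + (1.0 - m) * b
            for f, b, m in zip(foreground, background, mask)]

frame = [0.9, 0.8, 0.2, 0.1]   # two presenter pixels, then two wall pixels
office = [0.5, 0.5, 0.5, 0.5]  # the replacement background
mask = [1.0, 1.0, 0.0, 0.0]    # assumed output of a segmentation model

edited = composite(frame, office, mask)
print(edited)  # [0.9, 0.8, 0.5, 0.5]
```

Do that for every frame, with a mask that follows you as you move, and you have the skeleton of a background replacement.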

This is why filmmakers, advertisers, and even educators are buzzing about the possibilities. Instead of spending thousands on reshoots, you can type a prompt and let the AI adjust the footage.

But of course, that power comes with its own set of ethical landmines—which I’ll get to shortly.

The Cinematic Angle: Cinematic Cutscenes

Gamers will know this term well. Cinematic cutscenes are those scripted video sequences in games that advance the story between gameplay sections.

They’ve always been resource-intensive, requiring animators, voice actors, and designers.

Now imagine generating those cutscenes with text prompts. A game developer could type: “Hero enters a ruined cathedral, stained glass broken, rain falling through the ceiling,” and the AI could produce a first-pass sequence to refine from there.

It’s not hard to see why studios are watching this space carefully. If text-to-video AI becomes robust enough, it could lower costs dramatically while opening new creative possibilities.

Where the Data Comes From

Here’s a sticking point: how do these models learn?

Most are trained on massive datasets scraped from the internet—videos, captions, images, and metadata. That raises a few concerns:

- Copyright: If an AI model learned from thousands of Hollywood films, does generating a clip in “cinematic style” infringe on those works?

- Bias: Internet data reflects societal biases. AI models can replicate stereotypes or exclude underrepresented groups.

- Transparency: Many companies don’t fully disclose their datasets, leaving users in the dark.

So while the outputs are dazzling, the inputs are ethically messy.

Current Limitations

It’s worth grounding the hype in reality. As of now, text-to-video AI models often struggle with:

- Consistency: Characters morphing mid-clip.

- Hands and faces: Just like early image models, these are notoriously glitchy.

- Complex prompts: The more elements you add, the harder it is to keep them coherent.

- Length: Most clips are only a few seconds. Long-form storytelling remains out of reach.

So no, we’re not at the point where AI can generate a two-hour film indistinguishable from Hollywood. But the trajectory suggests we may not be far off.

The Emotional Side of Watching AI Video

Here’s where I want to shift from technical to emotional.

When I first watched an AI-generated video of a surreal cityscape melting into clouds, I felt awe, but also unease.

It was beautiful, but uncanny. My brain knew it wasn’t filmed by a human, and that knowledge colored the experience.

That tension—between wonder and discomfort—seems to be a hallmark of this moment. We’re impressed, but also wary. Excited, but also anxious about what this means for art, labor, and truth itself.

The Stats: Market Momentum

If you’re wondering how fast this is growing, the numbers tell the story.

- The global AI video generation market was valued at $411 million in 2023 and is projected to reach $1.7 billion by 2028 (MarketsandMarkets).

- A 2022 survey by Wyzowl found that 91% of businesses already use video as a marketing tool, with demand for cheaper and faster production methods rising each year (Wyzowl).

- Gartner predicts that by 2026, 20% of all marketing content will be generated by AI, including video.

The business case is undeniable. Whether we like it or not, text-to-video is coming fast.

Ethical and Creative Dilemmas

The rise of this tech raises questions we can’t ignore:

- Deepfakes: How do we prevent malicious use, like fake political speeches?

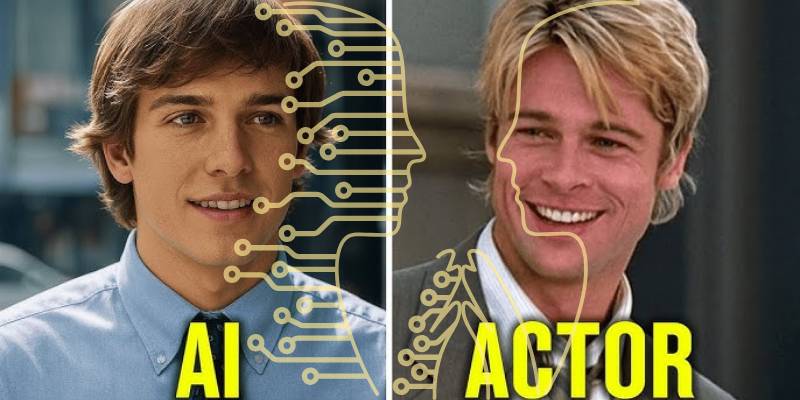

- Job displacement: What happens to videographers, editors, and actors if AI takes over?

- Artistic authenticity: Does a film made by prompt engineering have the same value as one shot by a human crew?

These aren’t easy questions. And honestly, we’re only at the beginning of wrestling with them.

A Personal Opinion

If you ask me: are text-to-video AI models soulless? My answer is complicated.

On one hand, they’re breathtaking tools. They democratize creativity, letting anyone bring visions to life without Hollywood budgets. On the other, they risk flattening art into algorithmic predictability.

To me, the sweet spot will be collaboration. Let machines handle the heavy lifting—the labor-intensive rendering, the model-powered editing, the translation. Let humans bring the soul—the story, the emotion, the risk.

That balance feels like the only way forward.

The Future Ahead

So where does this leave us?

I suspect we’ll see text-to-video AI evolve rapidly in the next few years, moving from 5-second clips to longer sequences, from surreal dreamscapes to realistic narratives.

Eventually, yes, we may get to the point where full-length films can be generated from text prompts.

But whether those films will feel the same as human-made art is another matter entirely. That’s where the human element—our imperfections, our stories, our empathy—will still matter most.

Closing Reflection

So, explained simply: text-to-video AI works by predicting and stitching together frames based on prompts, powered by massive models trained on oceans of data. It’s both dazzling and flawed, revolutionary and unsettling.

And while the technical side is fascinating, the emotional and ethical questions are even more pressing.

Because at the end of the day, this isn’t just about algorithms. It’s about how we tell stories, how we see ourselves, and how we decide what counts as real.

The technology will keep improving. The bigger question is: will we, as humans, keep asking the right questions alongside it?