The mood in Washington has shifted. The White House has announced plans to cut back regulations on artificial intelligence, aiming to boost innovation while keeping an eye on national security and public trust.

It’s a calculated gamble — one that could either spark an AI renaissance or light a fire the administration struggles to control.

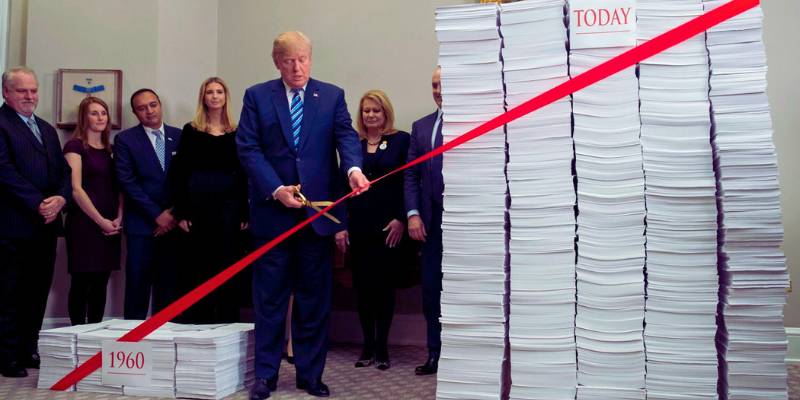

The latest statement from the administration lays out a plan to accelerate AI research, streamline oversight, and ease what it calls “unnecessary compliance barriers,” as reported in Corporate Compliance Insights.

The proposal, part of what’s being called America’s AI Action Plan, takes direct aim at what officials describe as “burdensome state-level restrictions.”

Essentially, Washington wants to stop the patchwork of AI laws sprouting across states before it turns into a regulatory nightmare. That’s a big deal: imagine trying to comply with fifty different definitions of “AI accountability.”

A detailed review published by Morrison Foerster breaks down this policy shift and its broader implications, noting that the federal government is seeking to establish national consistency in how AI is governed.

But there’s a twist. While the U.S. is dialing back, others are tightening up.

In Europe, the EU’s Artificial Intelligence Act has already laid down one of the toughest regulatory frameworks in the world, focusing on risk classification, transparency, and accountability.

A recent analysis by Tech Policy Press highlighted that European regulators are now entering the enforcement phase, precisely the opposite of the direction in which the White House seems to be moving. It’s almost poetic: two global powers, two wildly different playbooks.

Some experts believe this deregulation push could supercharge sectors like healthcare, manufacturing, and defense — areas where slow approvals and ethical reviews have reportedly delayed innovation.

In a feature from HIMSS News, analysts argued that trimming procedural delays might allow medical AI systems to reach patients faster, potentially saving lives.

Still, there’s a darker undercurrent — looser rules also mean greater risk of untested models making real-world decisions without sufficient oversight.

There’s a human side to this too. A senior policy aide quoted by Reuters said that “AI can’t thrive in a fog of fear,” but quickly added that “unchecked freedom brings its own shadows.”

That’s the tightrope the U.S. is walking now — trying to stay globally competitive without triggering another data-privacy scandal or misinformation storm.

And with the 2026 election cycle looming, you can bet the political consequences of getting AI wrong are already weighing on policymakers.

From my own perspective, it’s a bit of a double-edged sword. Sure, no one likes red tape — but some of it exists for good reason.

I remember how fast generative AI exploded last year: deepfakes, fake voices, doctored news clips. If there’s anything we’ve learned, it’s that technology doesn’t wait for ethics to catch up.

We’ve seen India roll out its own AI labelling mandate to fight deepfakes, according to a recent report from The Economic Times.

Maybe the U.S. could borrow a page from that playbook instead of burning the rulebook altogether.

Still, I get the logic. If America wants to lead, it can’t afford to choke its innovators with endless forms and audits.

The question is: will cutting rules unleash creativity, or chaos? I’m leaning toward cautious optimism — but only if transparency and accountability don’t get lost in the shuffle.

One thing’s certain: this moment marks a turning point in how the world’s biggest tech player plans to manage its machines.

And whether we end up celebrating a golden age of AI or cleaning up its messes years later, we’ll remember this as the day the U.S. decided to take the brakes off.