AI has dazzled us with its ability to create video content faster, cheaper, and sometimes more creatively than traditional methods. I’ll admit, the first time I saw an AI-generated video that looked convincingly human, my jaw dropped. It was like watching the future arrive in real time.

But here’s the uncomfortable truth: for every jaw-dropping demo, there’s an undercurrent of concern. AI video is not just a technological marvel; it’s also a Pandora’s box.

Beneath the innovation lies a tangled mess of ethical dilemmas: the fake media debate, risks to trust in video content, the potential for brand scandals, and the often-overlooked hidden costs of AI.

So, what happens when the very medium we’ve always trusted as “proof” becomes unreliable? What happens when brands chase efficiency and land in controversy?

What happens when the hidden environmental and cultural costs of AI video surface? This is what I want to explore here—not to fearmonger, but to face the darker side head-on.

Chapter 1: The Allure of AI Video

Before diving into the shadows, it’s worth acknowledging the light. AI video technology has made content creation accessible to small businesses, educators, and individuals who could never afford a production crew. Tools like Synthesia, Runway, and Pictory can turn a block of text into a video in minutes.

And in a world where 91% of businesses already use video as a marketing tool (Wyzowl 2023 survey), efficiency is irresistible. The global AI in media market is projected to hit $99.48 billion by 2030 (Grand View Research), which speaks volumes about demand.

But the same tools that democratize creativity also democratize deception. And that’s where things get tricky.

Chapter 2: The Fake Media Debate

This is the elephant in the room: the fake media debate.

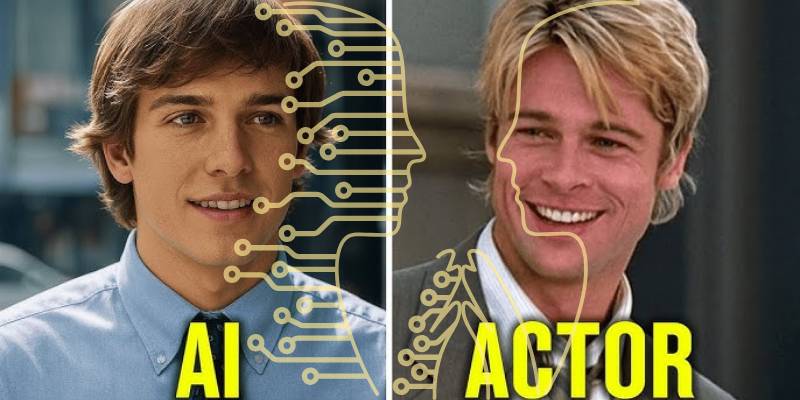

We’ve all grown up with an implicit trust in video. Photos can be staged, words can be twisted, but video—video feels like proof. AI smashes that assumption.

With deepfake technology, you can make a politician say something they never said or put a celebrity in an ad they never agreed to.

According to a 2019 Pew Research Center study, 63% of Americans said made-up or altered videos and images create a great deal of confusion about the facts of current events. And if people start doubting every video they see, we’re not just talking about entertainment. We’re talking about trust in journalism, politics, even personal relationships.

The debate isn’t just “is it possible?” (we know it is). It’s “what does society lose when video is no longer reliable?”

Chapter 3: Trust in Video Content

That leads directly to the question of trust in video content.

If AI-generated videos proliferate unchecked, public trust may erode further. Think about it: in court cases, video evidence has long been considered the gold standard. In advertising, testimonials filmed on camera create authenticity. On social media, raw video clips carry weight.

But what happens when people assume every video might be fake? The damage isn’t just abstract. It’s personal. Victims of AI-generated harassment or revenge porn already face the impossible task of proving what’s real and what’s fabricated.

I find this terrifying because it chips away at one of our most basic human instincts: believing our eyes.

Chapter 4: Brand Scandals

It’s not just individuals at risk—companies are too.

AI video promises to save brands money and time. But when misused, it can spiral into brand scandals.

Imagine a company launching an ad with an AI-generated spokesperson, only for consumers to discover the person doesn’t exist. Or worse, using AI to recreate the likeness of a real person without consent.

There have already been cases where brands were criticized for using AI art instead of paying human creators. Extend that outrage to video, and the fallout could be brutal. Consumers are savvy, and authenticity matters more than ever.

A 2023 Ipsos survey found that 62% of consumers distrust brands that rely too heavily on AI without transparency. That’s not a minor risk—that’s a reputation killer.

Chapter 5: The Hidden Costs of AI

Beyond the obvious risks, let’s talk about the hidden costs of AI.

- Environmental impact: Training large AI video models consumes staggering amounts of energy. A 2019 University of Massachusetts Amherst study, widely reported by MIT Technology Review, estimated that training a single large language model could emit as much carbon as five cars over their lifetimes. With AI video models multiplying, the environmental footprint can’t be ignored.

- Job displacement: Editors, actors, voice-over artists—whole creative industries face disruption. While AI can lower costs for businesses, it risks sidelining thousands of human workers.

- Cultural homogenization: AI models are trained on existing data. That means they often reproduce the same tropes, aesthetics, and cultural biases, narrowing rather than expanding creative diversity.

These costs don’t appear on a company’s balance sheet, but society pays the bill.

Chapter 6: Misinformation at Scale

Misinformation isn’t new, but AI video supercharges it. Short, snackable clips spread faster than fact-checks can catch them. On platforms like TikTok or YouTube, an AI-generated fake can reach millions before being flagged.

Imagine the consequences in an election cycle or during a global crisis. The “liar’s dividend” becomes real: once fakes exist, even authentic videos can be dismissed as fake. That undermines accountability itself.

It’s chilling to think about how fragile our information ecosystem already is, and AI video is like tossing a grenade into the middle of it.

Chapter 7: Where Regulation Stumbles

Some governments have started drafting rules. California and Texas, for example, passed laws restricting malicious deepfakes in elections and pornography. Platforms like YouTube and Facebook claim to remove misleading AI videos.

But enforcement is spotty, and technology tends to outpace legislation. By the time a regulation is drafted, AI has often already evolved.

My opinion? We need international frameworks, not just state or platform policies. Otherwise, bad actors will simply exploit loopholes across jurisdictions.

Chapter 8: Why Audiences Still Crave Authenticity

Despite the risks, here’s a comforting thought: people still value the real thing. Raw, unpolished, even messy human content resonates in ways AI can’t replicate.

That’s why TikToks filmed in bedrooms sometimes outperform glossy campaigns. That’s why documentaries shot on shaky cameras can move us more than CGI epics.

Audiences are not passive. They can sniff out inauthenticity, and they will push back against brands or creators who overuse AI. Which makes me think: maybe the real safeguard isn’t just regulation, but consumer demand for honesty.

Chapter 9: Case Studies—The Dark Side in Action

- Political deepfakes: In 2018, a Belgian political party circulated a fabricated video of President Trump to push a climate change message. Even though it was meant as provocation rather than deception, many viewers believed it was real.

- Non-consensual harassment: A 2019 Deeptrace report found that 96% of deepfake videos online were pornographic, overwhelmingly targeting women.

- Corporate missteps: Smaller brands have faced backlash for using AI-generated influencers or ads without disclosure, sparking debates about deception.

Each case highlights how the dark side isn’t theoretical—it’s already here.

Chapter 10: My Personal Take

I’ll be honest. I’m fascinated by the artistry of AI video, but unsettled by its misuse. I love the idea of students creating documentaries with no budget, or small businesses making ads without hiring a production crew. That democratization matters.

But I can’t shake the unease. Every time I see a hyper-realistic AI video, a voice in my head whispers: What happens when I can’t trust what I see anymore?

In my view, the only path forward is balance. Use AI where it enhances, but insist on transparency, consent, and accountability. Without those, the cost is too high—not just financially, but socially and emotionally.

Chapter 11: Future Outlook

What’s next? A few scenarios:

- Short-term (2–3 years): Explosion of AI video in marketing, alongside growing consumer skepticism.

- Medium-term (5–10 years): Major brand scandals tied to undisclosed AI use. Expect lawsuits, regulations, and public apologies.

- Long-term (10+ years): A cultural reckoning—society redefining what “evidence” and “authenticity” mean in the age of synthetic media.

Whether this outlook leans positive or negative depends not on technology, but on how we use it.

Conclusion

AI video is a marvel, but it’s also a minefield. Behind the excitement lurk the fake media debate, the erosion of trust in video content, the looming threat of brand scandals, and the sobering hidden costs of AI.

The dark side isn’t about whether AI is inherently bad. It’s about the consequences of unleashing powerful tools without guardrails.

So my advice? Don’t reject AI video outright—but don’t embrace it blindly either. Treat it as a tool, not a truth. Be transparent, be ethical, and most of all, be human. Because in a world where fakes look real, the rarest commodity may be honesty.