A few years ago, most of us thought of deepfakes as quirky internet experiments—funny face swaps or surreal mashups.

Fast forward to now, and the conversation has turned much heavier. Deepfake videos are no longer just memes; they’re raising thorny ethical, legal, and cultural questions.

Every time I see a new clip circulating online, I ask myself: am I watching something harmlessly creative, or am I witnessing the slow unraveling of our trust in video itself?

The ethics of deepfake videos is not a niche debate anymore. It touches politics, entertainment, journalism, personal privacy, and the very concept of truth in media.

And while the technology itself is neutral—it’s the intent and use that matter—the risks and consequences are too big to ignore.

Chapter 1: What Exactly Are Deepfake Videos?

Let’s ground ourselves.

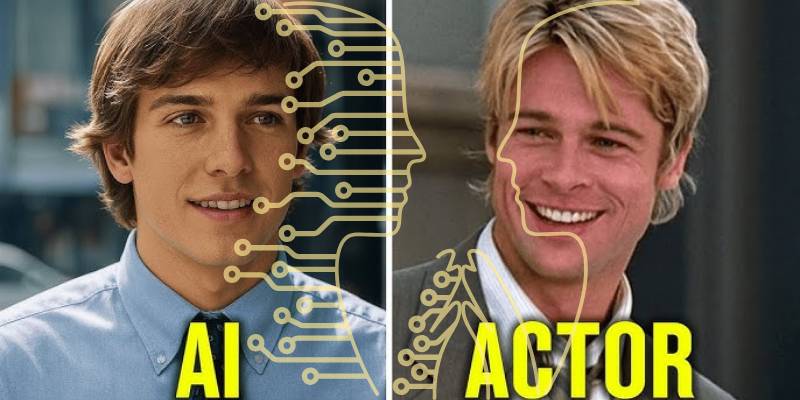

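Deepfake technology uses artificial intelligence, specifically deep learning models such as autoencoders and Generative Adversarial Networks (GANs), to manipulate or generate video content. In a GAN, one network generates fake frames while a second network tries to tell them apart from real footage, and each round of that contest pushes the fakes closer to realism.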

This can mean anything from swapping a celebrity's face into a movie scene to making a politician appear to say something they never said.

The realism is shocking. A 2022 MIT study showed that viewers identified deepfakes correctly only about 65% of the time: better than a coin flip, but far from reliable. That statistic alone should give us pause.

Chapter 2: The Virtual Actors Controversy

One of the biggest ethical battlegrounds right now is the virtual actors controversy.

Studios and advertisers are experimenting with AI-generated performers who don't exist in real life or, more troubling, digitally resurrecting deceased actors. Remember the buzz when Rogue One brought back Peter Cushing, who died in 1994, as Grand Moff Tarkin, and recreated a young Carrie Fisher as Princess Leia? That was only the beginning.

On one hand, these tools allow creative storytelling, like bringing historical figures "back to life" for educational purposes. On the other, they raise disturbing questions: Did the actor consent? Who controls their likeness after death? What happens to working actors if studios can endlessly recycle digital doubles instead of hiring humans?

This isn’t a hypothetical anymore—it’s an industry-wide reckoning.

Chapter 3: Fake News and Manipulation

Here’s where things get scarier.

Deepfakes are powerful tools for fake news and manipulation. A convincing video of a political leader saying something inflammatory could spread like wildfire before fact-checkers catch up. Even if it’s later debunked, the damage is done.

The Brookings Institution warns that deepfakes could "erode public trust, manipulate elections, and destabilize democracies." In a world where misinformation already travels faster than the truth, deepfakes pour gasoline on the fire.

Personally, I find this terrifying. We’ve always relied on video as “proof.” What happens when that proof is no longer reliable?

Chapter 4: Copyright Law and AI

Now let’s tackle the elephant in the room: copyright law and AI.

Who owns a deepfake? The person whose image was used? The creator of the algorithm? The platform hosting it? U.S. copyright law was not designed for this reality.

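In 2023, the U.S. Copyright Office issued guidance stating that works generated by AI without human authorship can't be copyrighted. That answers part of the ownership question, but it does little for the person whose face appears in the video: in the U.S., control over one's likeness falls mostly to state right-of-publicity laws, which vary widely. The result is a gray area where anyone's face can be borrowed, altered, and redistributed, with little recourse for the person being imitated.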

Until the law catches up, we’re left with patchwork protections, and in my opinion, a system that tilts unfairly against individuals while benefiting corporations and tech platforms.

Chapter 5: Authenticity in AI Media

This leads us to a deeper cultural issue: authenticity in AI media.

Do audiences care if something is “real” as long as it’s entertaining? Social media trends suggest that authenticity still matters—people crave raw, unfiltered content as much as polished productions.

But if AI-driven fakes flood the landscape, will authenticity become a luxury good, something only a few can guarantee?

Here’s my personal worry: if the line between real and artificial blurs too much, cynicism might replace curiosity. Imagine living in a media environment where everything is suspect, and nothing can be trusted at face value. That’s not just exhausting—it’s corrosive.

Chapter 6: Harms to Individuals

The most immediate ethical impact isn’t political or cultural—it’s personal.

Deepfakes have been weaponized in harmful ways, most notoriously in non-consensual explicit content. According to a 2019 report by Deeptrace, 96% of deepfake videos online were pornographic and overwhelmingly targeted women.

This isn’t about art or parody—it’s exploitation. And it leaves real people, often without resources or legal recourse, dealing with reputational and emotional trauma.

That alone should force us to take this technology’s misuse seriously.

Chapter 7: Benefits and Ethical Uses

It’s not all doom and gloom. Like any technology, deepfakes also have ethical applications:

- Education: Bringing historical figures to life in classrooms.

- Accessibility: Translating videos into different languages with accurate lip-sync.

- Entertainment: De-aging actors for flashback scenes or enabling creative experiments.

- Satire: Political parody that’s clearly marked as such.

The point isn’t to ban the technology altogether—it’s to draw firm boundaries between ethical and unethical use.

Chapter 8: Regulation and Responsibility

So who’s responsible for managing deepfakes? Governments? Tech companies? Audiences?

- Governments: Some states, like California and Texas, have passed laws targeting malicious deepfakes in elections and non-consensual pornography.

- Platforms: YouTube and Facebook have pledged to remove misleading deepfakes, though enforcement is spotty.

- Users: Media literacy campaigns encourage viewers to question what they see.

I believe we need a layered approach. Relying on one group—whether governments or platforms—won’t work. It’s going to take cooperation, transparency, and constant vigilance.

Chapter 9: Synthetic Reality and Trust

Deepfakes are part of a broader trend toward “synthetic reality,” where AI blurs the boundaries between the real and the artificial.

This raises a fundamental question: in a world where anything can be faked, what does “truth” even mean?

If video can no longer serve as evidence, society will have to rethink standards of proof in journalism, law, and everyday communication. And that, to me, feels like one of the biggest cultural shifts of our lifetime.

Chapter 10: My Personal Take

I’ll be honest: I feel conflicted.

On one hand, I’m amazed by the technical artistry of deepfakes. The precision, the sheer creativity—it’s impressive. On the other, I’m unsettled by how easily that same creativity can be twisted into harm.

For me, the ethical path is not to reject the technology outright but to insist on transparency and accountability. Deepfakes should be clearly labeled. Consent should be non-negotiable. And laws should protect individuals from exploitation.

Because at the end of the day, ethics isn’t about what’s possible. It’s about what’s right.

Chapter 11: Looking Ahead

The future of deepfakes will likely be a mix of innovation and regulation. Expect:

- More controversy over virtual actors as studios push boundaries.

- Tighter laws addressing copyright and AI.

- Rising public demand for authenticity in AI media.

- Continued battles against fake news and manipulation.

The question isn’t whether deepfakes will shape our future—they already are. The question is whether we’ll shape them responsibly, or let them erode the very foundations of trust.

Conclusion

Deepfake videos are a technological marvel and a cultural minefield. They embody the best and worst of AI innovation: creative potential on one side, manipulation and harm on the other.

From the virtual actors controversy to fake news and manipulation, from copyright law and AI to the fragile promise of authenticity in AI media, the stakes are too high to ignore.

And my personal stance? Let’s embrace the ethical uses while fighting fiercely against the abuses. Because the future of media—and perhaps even democracy itself—depends on whether we can keep truth alive in an age where the line between real and fake has never been thinner.